From Brittle to Brilliant: Why We Replaced OCR with VLMs for Image Extraction

In the world of blockchain intelligence, investigators often encounter critical information not as text, but as images such as screenshots of transaction confirmations, swap service receipts, or wallet interfaces. The speed and accuracy with which we can extract data like cryptocurrency addresses and transaction hashes from these images can have a major impact on the outcome of an investigation.

For years, Optical Character Recognition (OCR) has been the standard tool for this task. But as the crypto landscape grows more complex, the limitations of simply "reading" text have become increasingly apparent. This blog post details TRM Labs' journey from the constraints of traditional OCR to the nuanced power of Vision Language Models (VLMs) like Gemini 2.5 Pro, and how the key to unlocking their potential lies in the art and science of the prompt.

Key takeaways

- The prompt is your primary lever: The performance of a powerful VLM is directly proportional to the quality of its prompt. For robust data extraction, prompts must be specific, provide a clear output structure (like JSON), and include constraints to filter out noise.

- Evaluation turns experiments into tools: An AI model's true value is only proven through a rigorous evaluation framework. Hard metrics are essential to validate performance and ensure a solution is not just promising, but production-ready and reliable at scale.

- Security must be architected, not assumed: Integrating AI requires a "security by design" approach. Architectural safeguards, such as running workflows in isolated sandbox environments, are as critical as the model itself to defend against threats like data exfiltration and prompt injection.

{{horizontal-line}}

The OCR baseline: A good start, but not enough

When faced with the challenge of extracting data from images, our first instinct was to deploy OCR. It's a mature technology, effective at pulling text from a clear background. And initially, it worked — to a degree.

While it could read the characters, we quickly ran into three critical roadblocks:

- Lack of filtering: An OCR tool extracts all text indiscriminately. Our output was cluttered with irrelevant information like transaction amounts, network fees, confirmation times, and dollar equivalents. This noise required significant post-processing to isolate the data that mattered.

- Accuracy issues: The classic OCR errors such as mistaking an 'O' for a '0' or an 'I' for a '1' are minor typos in an email, but catastrophic for a 42-character hexadecimal address. A single incorrect character renders the entire address useless.

- Contextual blindness: OCR provides characters, not context. It couldn't reliably link a label like "From Address" to the string of characters that followed it. We were left with a jumble of text, unable to programmatically determine which address was the sender and which was the receiver.

.png)

The VLM promise: A glimpse of a smarter solution

These limitations led us to explore Vision Language Models (VLMs). Unlike OCR, which only sees characters, a VLM like Gemini 2.5 Pro possesses a deeper, more contextual understanding of an image. It can identify objects, understand layouts, and interpret the relationship between different pieces of information.

Our initial experiments were optimistic but simplistic. We gave the model a straightforward command:

{{from-brittle-to-brilliant-callout-1}}

The results were a step up from OCR, but still fell short of our requirements. The model would often miss one of the addresses, fail to identify the transaction hash, or return the data in an unstructured, unpredictable format. It was better, but not reliable enough for a production system. We learned an important lesson: a powerful model is only as good as the instructions it's given.

.png)

The art of perfect prompt: From good to great

The breakthrough came when we shifted our focus from simply asking for information to teaching the model exactly what we needed and how we wanted it formatted. This process of prompt engineering transformed our results from mediocre to near-perfect accuracy.

Here are the key principles we implemented:

1. Be specific and explicit

We stopped using vague terms. Instead of asking for "crypto addresses," we specified the exact labels we saw in the images.

{{from-brittle-to-brilliant-callout-2}}

This change immediately improved the model's ability to locate the correct data points.

2. Provide structure (few-shot prompting)

To solve the problem of unstructured output, we provided the VLM with an example of the exact format we wanted. By including a JSON schema in the prompt, we gave the model a template to follow.

{{from-brittle-to-brilliant-callout-3}}

The model now understood not only what to extract but also how to structure it, solving the label-value association problem that plagued OCR.

3. Set constraints

Finally, to eliminate the noise that OCR couldn't handle, we added negative constraints — telling the model what to ignore. This preemptively filtered the output, saving us valuable processing time.

Putting it all together, our final prompt looked something like this:

{{from-brittle-to-brilliant-callout-4}}

The results were transformative. The VLM now returned clean, accurate, and perfectly structured data, ready for immediate ingestion into our systems.

.png)

Measuring what matters: Our evaluation framework and results

At TRM Labs, we believe an evaluation framework is the most valuable asset in any AI system. An AI application is only as good as its ability to be measured, monitored, and evaluated. Before any solution is deployed, it must pass through a rigorous evaluation gauntlet to ensure it meets our exacting standards for accuracy and reliability.

To test our VLM-based extraction method, we curated a diverse dataset of 500+ real-world images, representing a wide range of layouts, fonts, and image qualities. Our goal was to measure two critical metrics:

- Accuracy: The character-for-character correctness of the extracted addresses and hashes.

- Consistency: The reliability of the output format for programmatic use.

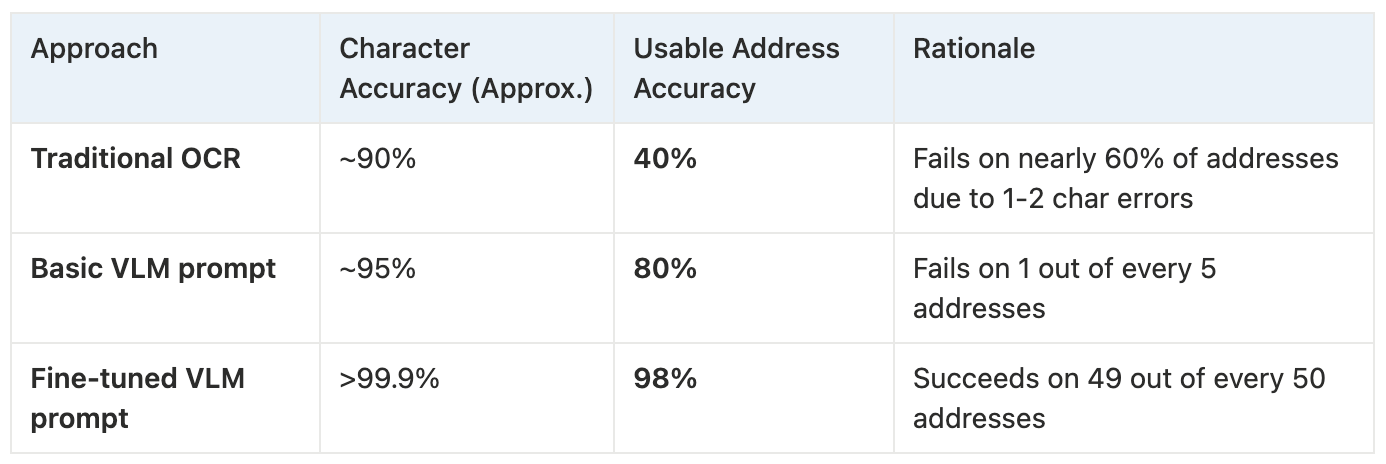

The results from our three-stage comparison speak for themselves.

Stage 1: The OCR baseline

When we processed the dataset using traditional OCR tools, the performance was simply not viable for a production system.

- Result: The usable accuracy was only around 40%, as nearly 60% of the extractions contained at least one character-level error in a crypto address (the classic 'O' vs. '0' or 'l' vs. '1' typos).

- Impact: This meant the data was fundamentally unreliable and would require manual human review and correction for a majority of the images.

Stage 2: VLM with a basic prompt

Next, we used Gemini 2.5 Pro with a simple, generic prompt like "extract the crypto addresses from the image." While an improvement, the results were still far below our production threshold.

- Result: The usable accuracy improved significantly to 80%. However, the remaining 20% of images still produced addresses with OCR-like typos, and the output structure was never consistent — sometimes it was a sentence, sometimes a list, and sometimes it missed data entirely.

- Impact: The lack of a consistent structure and the remaining 20% inaccuracies made this approach not reliable enough for a fully automated workflow.

Stage 3: VLM with our final, fine-tuned prompt

This is where the power of prompt engineering became undeniable. By providing a specific, structured, and constrained prompt, we achieved a transformative leap in performance.

- Result: We achieved 100% structural consistency, with every output delivered in the exact JSON format we specified. More importantly, the data itself was 98% accurate across all extracted addresses and transaction hashes.

- Impact: This level of accuracy and reliability immediately made the solution production-ready, turning a manual, error-prone task into a scalable, automated capability.

.png)

Table: Accuracy metrics at a glance

From evaluation to production

These results weren't just a successful experiment. This VLM-powered solution is now a core component of our data processing pipeline. It has maintained its 98% accuracy while successfully processing over thousands of images in production, proving its scalability and affirming our belief that with the right framework, AI can deliver truly reliable and transformative results.

The road ahead: Empowering VLMs with tools and techniques

The success of fine-tuned VLMs in outperforming traditional OCR is a significant milestone — but it's just the beginning. At TRM, we view innovation as an iterative process. Our next steps are focused on evolving this technique from a simple extraction tool into a more autonomous, resilient, and intelligent system by equipping it with new capabilities.

Here’s what’s next on the horizon:

1. Creating a self-correction loop with VLM tooling

The most promising immediate improvement is to grant the VLM the ability to verify its own work. We are moving towards a future where the VLM doesn't just extract data, but validates it in real-time by using external tools, much like a human analyst would.

The concept

We can provide the VLM with access to a simple but powerful tool: an isValidAddress() function. This tool can programmatically check if a given string conforms to the valid structural rules of a cryptocurrency address (e.g. passing an Ethereum address checksum, matching a Bitcoin address format).

The workflow in action

- Extraction: The VLM analyzes an image and extracts a potential crypto address, for instance,

0x08c.... - Tool use: Before presenting the result, the VLM internally calls the validation tool:

isValidAddress('0x08c...'). - Verification: The tool runs its checks and returns a

TrueorFalseresponse. - Self-correction: If the tool returns

False, the VLM understands it made a mistake. It can then re-examine the image with the new context that its initial reading was incorrect, potentially correcting a common OCR-type error (like 'O' vs. '0') on its second attempt.

The benefit

This creates a closed-loop, self-correcting system. It dramatically reduces the risk of hallucinations or simple extraction errors making it into our data pipeline. The model transitions from a passive extractor to an active, self-validating agent.

2. Revisiting the classics: Image pre-processing for enhanced performance

While VLMs are incredibly powerful at interpreting complex, "messy" images, we are exploring whether classic image pre-processing techniques — staples of the OCR world — can further boost their performance. The hypothesis is that by cleaning the input, we can improve both accuracy and speed.

The concept

Before an image is sent to the VLM, it would pass through an automated pre-processing pipeline. This could include steps like:

- Binarization: Converting the image to high-contrast black and white to make text stand out.

- De-skewing: Straightening text that is tilted or rotated in the image.

- Noise reduction: Removing digital artifacts or "speckles" from the background.

The potential benefits

- Higher accuracy: For low-quality or challenging images, pre-processing reduces ambiguity. A cleaner, clearer image makes it easier for the VLM to distinguish between similar characters, pushing its accuracy even higher on difficult edge cases.

- Improved latency: A simpler, high-contrast image may be less computationally intensive for the VLM to analyze. By reducing the "noise," the model can potentially arrive at a confident answer more quickly, which is a critical factor for building a responsive, production-scale system.

3. Enhancing our secure sandbox with proactive threat detection

Our commitment to security begins at the architectural level. As a baseline, all VLM workflows at TRM are already executed within dedicated, self-hosted sandbox environments. These isolated instances, powered by workflow automation platforms (like n8n, for example), operate with strict network egress rules and the principle of least privilege. This foundational security layer provides a powerful guarantee: even if a prompt injection attack were to manipulate the VLM's output, our architecture makes data exfiltration attempts impossible by blocking all unauthorized outbound connections.

While our sandbox provides a critical fail-safe, the next evolution in our defense-in-depth strategy is to add a proactive layer that neutralizes threats before they even reach the model.

The concept

Our next step is to build an intelligent "security gate" — a proactive threat detection and sanitization service that inspects all inputs. The hypothesis is that by identifying and disarming malicious payloads at the earliest possible stage, we can make our VLM workflows more efficient, resilient, and secure against a broader range of attacks.

This new layer will involve implementing techniques such as:

- Semantic threat analysis: Using another AI model to analyze the intent of a prompt, flagging instructions that are out of context or attempt to override the VLM's core objectives (e.g. "ignore all previous instructions...").

- Exfiltration pattern matching: Actively scanning for and blocking the known signatures of data exfiltration attacks, such as the use of Markdown image syntax with suspicious, non-whitelisted external URLs.

- Automated sanitization: Programmatically identifying and stripping out potentially malicious parts of an input before the "clean" version is passed to the VLM for processing.

The added benefits

- Early threat neutralization: This approach stops attacks before they consume VLM resources, making the entire system more efficient and robust. It prevents the model from ever being exposed to the malicious instruction.

- Defense against output manipulation: Beyond just preventing data theft, this layer can defend against attacks designed to simply corrupt the output (e.g. tricking the VLM into returning a wrong but valid crypto address). This protects the integrity of the data itself, which our exfiltration-focused sandbox alone may not prevent.

By building this proactive detection layer on top of our already-secure sandboxed architecture, we are creating a truly comprehensive, multi-layered defense that ensures our AI systems are resilient, trustworthy, and safe by design.

And by exploring this blend of classic techniques and cutting-edge models, we aim to build a pipeline that is not only intelligent but also highly optimized, leveraging the best of both worlds to achieve maximum efficiency and accuracy.

Security by design: Our approach to AI-powered intelligence

At TRM Labs, the integrity and confidentiality of data are foundational. Integrating powerful AI like VLMs into our intelligence pipeline follows a strict "security by design" methodology. This means we don't just use these tools; we embed them within a multi-layered security framework designed to protect data at every stage.

Here’s a look at the core security principles that govern our use of image extraction technologies:

1. Zero Data Retention (ZDR) and vendor partnerships

Our engagement with any AI model vendor begins with a non-negotiable security requirement: a Zero Data Retention (ZDR) policy, enforced by rigorous data privacy agreements.

- What this means: When we send an image to a VLM for analysis, the vendor is contractually bound not to log, store, or use our data for any other purpose, including training their future models. The data is processed ephemerally and is not retained once the request is complete.

- The impact: This policy is our first and most important line of defense against data breaches and privacy violations at the vendor level. It ensures that the sensitive information we analyze remains exclusively within our control, effectively creating a secure, stateless processing environment.

2. Proactive data minimization and de-risking

We adhere to a strict policy of not sharing customer-specific data with third-party AI models.

- What this means: The image extraction techniques described here are developed, tested, and validated using generalized, non-customer-specific data. This includes publicly available information and internally generated test cases that mimic real-world scenarios without using actual case data.

- The impact: This proactive measure fundamentally de-risks the entire process. By separating model interaction from confidential customer investigations, we ensure that sensitive case details are never exposed to an external environment, adding a critical layer of operational security and client trust.

3. Internal chain of custody

Even with ZDR in place, maintaining an immutable internal record is paramount for the evidentiary quality of our work.

- What this means: From the moment an image is ingested, our internal systems maintain a strict, immutable log — a digital chain of custody. We record the source image, the exact VLM version used, the specific prompt deployed, and the final verified output.

- The impact: This guarantees a fully auditable and reproducible trail for every piece of intelligence we generate, ensuring that our findings are not only accurate but also forensically sound.

By building this robust security posture — combining contractual ZDR, stringent data scoping, continuous model validation, and a rigorous chain of custody — we can confidently harness the groundbreaking capabilities of AI, without ever compromising on our commitment to security and trust.

Conclusion

Our journey from the limitations of OCR to the precision of fine-tuned VLMs highlights a fundamental evolution in how we approach automated data extraction. While the power of models like Gemini 2.5 Pro is immense, our success proves it is not a "magic box." True, production-ready capability emerges from a holistic methodology that combines thoughtful prompt engineering, rigorous, data-driven evaluation, and a security-first mindset.

By investing time in crafting specific and structured prompts — and validating our results with hard metrics — we built a system that is not just faster and more accurate, but is also scalable and trustworthy. Architecting this solution within a secure, sandboxed environment ensures that we can innovate safely. This dedication to leveraging AI responsibly and effectively is at the heart of what we do at TRM Labs — turning complex, unstructured data into actionable, reliable blockchain intelligence. And our work isn't done; we continue to push the boundaries by exploring ways to make these systems even more intelligent and resilient for the future.

Interested in solving complex AI challenges to build a safer financial system? See all of our open roles here.

{{horizontal-line}}

Article contributors

- Mohan Kumar | Business Process Automation Specialist, TRM Labs

- Rahul Raina | CTO and Co-founder, TRM Labs

Access our coverage of TRON, Solana and 23 other blockchains

Fill out the form to speak with our team about investigative professional services.